The A/B Testing Framework That Built a $10M Growth Engine

The systematic approach to experimentation that led to 100+ successful tests and measurable growth.

Summarized for you

Most growth teams waste resources testing trivial changes while ignoring systematic approaches that drive breakthrough results. This guide reveals the RISER methodology (Research, Ideate, Structure, Execute, Review) that generated 100+ winning tests and built a $10M growth engine. Learn the testing hierarchy pyramid that prioritizes high-impact experiments, statistical design principles that ensure reliable results, and advanced frameworks including sequential testing and multi-armed bandits that separate growth leaders from button-color optimizers.

Ready to transform random testing into systematic growth experimentation? Let's build your A/B testing framework →

Most growth teams are running A/B tests wrong. They're testing button colors while their value proposition is broken. They're celebrating 2% lifts while leaving 40% improvements on the table. They're making decisions on incomplete data, and those decisions lead them further from breakthrough growth.

After running over 100 A/B tests across SaaS and e-commerce brands—helping scale companies from zero to $2.4M ARR and doubling revenue in 90-day sprints—I've learned that successful experimentation isn't about testing more. It's about testing smarter.

The framework I'm sharing today is the systematic approach that separated winning growth strategies from expensive guesswork. It's how I consistently identify tests that move the needle and avoid the vanity metrics that waste time and budget.

The Pyramid of Test Impact: Where Most Teams Go Wrong

Not every A/B test is created equal. Most growth teams start at the bottom of the impact pyramid, testing superficial elements while ignoring fundamental conversion drivers.

Level 1: Core Value Proposition

Level 2: Conversion Psychology

Level 3: User Experience Flow

Level 4: Visual Design Elements

Level 5: Micro-Optimizations

Real Impact Example

I once increased conversions by 203% for a B2B SaaS client by testing Level 1 elements—changing "The Best CRM for Small Business" to "The CRM That Actually Gets Used." Meanwhile, their previous agency spent months testing button colors for 0.3% lifts.

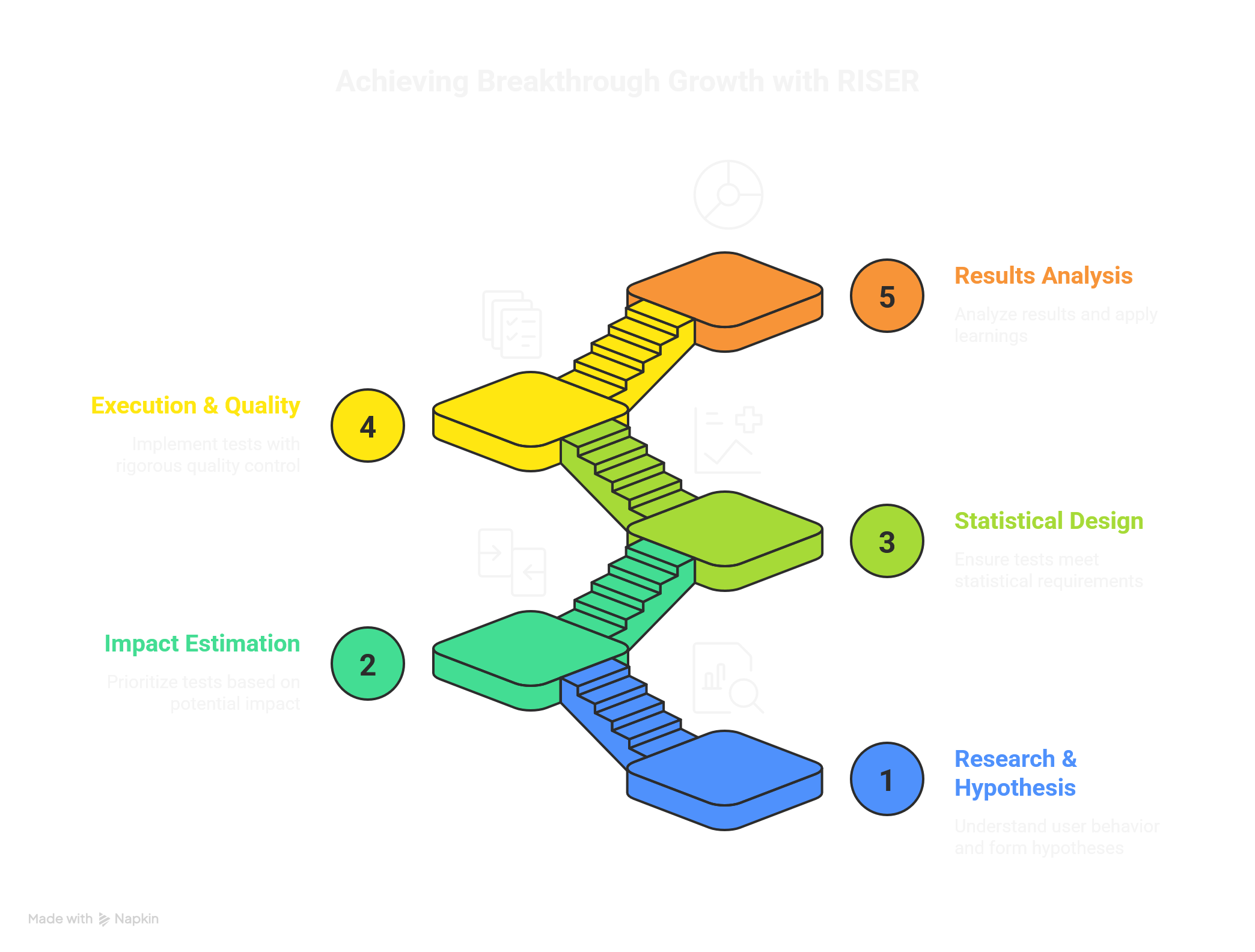

The RISER Framework: My 5-Step Testing Process

Random testing leads to random results. Breakthrough growth comes from systematic experimentation that builds on previous learnings and compounds over time.

Research & Hypothesis Formation

Every test starts with understanding why users aren't converting

Quantitative Analysis

Hypothesis Structure

"If we [specific change], then [target audience] will [desired behavior] because [psychological reasoning]."

Example:

"If we lead with customer ROI results instead of feature lists, then trial prospects will sign up at higher rates because B2B buyers care more about outcomes than capabilities."

Impact Estimation

Not all tests are worth running. Prioritize based on potential impact versus complexity

Scoring System (1-5 scale each)

How much could this move the needle?

How quickly can we build and launch?

How much will results inform future tests?
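A minimal sketch of how this scoring could be wired up in Python. The class name, field names, and the equal-weight sum are assumptions for illustration; the framework above only specifies three questions, each scored from 1 to 5. The backlog entries and their scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ExperimentIdea:
    name: str
    impact: int    # How much could this move the needle? (1-5)
    speed: int     # How quickly can we build and launch? (1-5)
    learning: int  # How much will results inform future tests? (1-5)

    def score(self) -> int:
        # Assumption: the three scores are weighted equally and summed.
        for value in (self.impact, self.speed, self.learning):
            if not 1 <= value <= 5:
                raise ValueError("each score must be between 1 and 5")
        return self.impact + self.speed + self.learning


def prioritize(ideas):
    """Return ideas sorted from highest to lowest total score."""
    return sorted(ideas, key=lambda idea: idea.score(), reverse=True)


# Hypothetical backlog, for illustration only.
backlog = [
    ExperimentIdea("Outcome-led headline", impact=5, speed=4, learning=5),
    ExperimentIdea("Button color", impact=1, speed=5, learning=1),
    ExperimentIdea("Shorter checkout flow", impact=4, speed=2, learning=4),
]

ranked = prioritize(backlog)
for idea in ranked:
    print(f"{idea.score():>2}  {idea.name}")
```

If impact matters more to your team than speed, a weighted sum is a small change to `score()`. The point is to make the trade-off explicit before a test gets built.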

Statistical Design

Most teams run tests too short or with insufficient sample sizes

Statistical Requirements

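95% confidence level: at most a 5% chance of declaring a winner when there is no real difference

80% statistical power: at most a 20% chance of missing a real improvement

Full-week test duration: accounts for weekly behavior cycles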
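To see what these requirements mean in visitors, here is a sketch of a sample-size estimate using the standard normal-approximation formula for comparing two conversion rates. The function names, the 10% baseline, the 20% relative lift, the 500 daily visitors, and the 14-day floor are illustrative assumptions, not figures from any client test.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variation for a two-sided test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # 5% false-positive rate
    z_beta = NormalDist().inv_cdf(power)           # 20% miss rate
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def duration_in_full_weeks(n_per_variation, daily_visitors, variations=2, min_days=14):
    """Days needed, rounded up to whole weeks to cover weekly cycles."""
    days = math.ceil(n_per_variation * variations / daily_visitors)
    full_weeks = math.ceil(days / 7) * 7
    return max(min_days, full_weeks)  # min_days is an assumed floor


# Hypothetical inputs: 10% baseline conversion, hoping to detect a 20% relative lift.
n = visitors_per_variation(baseline_rate=0.10, relative_lift=0.20)
days = duration_in_full_weeks(n, daily_visitors=500)
print(f"{n} visitors per variation, about {days} days at 500 visitors/day")
```

Smaller expected lifts push the required sample up quickly, which is why a 500-visitor, 3-day test can only detect very large effects.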

Case Study: Statistical Rigor Pays Off

For a client's pricing page test, this meant running 2,847 visitors per variation over 14 days instead of their original plan of 500 visitors over 3 days.

improvement caught that would have been invisible in their truncated test

Execution & Quality Control

Perfect test design means nothing if implementation is flawed

Technical Validation

Quality Assurance

Quality Control Warning

I've seen tests invalidated by implementation errors that weren't caught until after thousands of visitors had been exposed. Quality control isn't optional—it's the foundation of reliable results.
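One widely used check for this kind of implementation error is a sample ratio mismatch (SRM) test: if traffic was meant to split 50/50 but the observed counts are badly skewed, something in assignment or tracking is probably broken. The sketch below is one possible check, not necessarily part of the process described above; the function name, visitor counts, and 0.001 alert threshold are assumptions.

```python
import math


def sample_ratio_mismatch_p_value(visitors_a, visitors_b, expected_share_a=0.5):
    """Chi-square goodness-of-fit test (1 degree of freedom) on the traffic split."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi_square = (
        (visitors_a - expected_a) ** 2 / expected_a
        + (visitors_b - expected_b) ** 2 / expected_b
    )
    # With one degree of freedom, the chi-square tail probability
    # equals erfc(sqrt(chi_square / 2)).
    return math.erfc(math.sqrt(chi_square / 2))


SRM_THRESHOLD = 0.001  # assumed alert level; stricter than 0.05 to limit false alarms

# Hypothetical counts for a test configured as a 50/50 split.
healthy = sample_ratio_mismatch_p_value(5000, 5060)
skewed = sample_ratio_mismatch_p_value(5000, 5400)

for label, p in (("healthy", healthy), ("skewed", skewed)):
    status = "investigate before trusting results" if p < SRM_THRESHOLD else "split looks fine"
    print(f"{label}: p = {p:.4f} -> {status}")
```

Running a check like this in the first day or two of a test catches many assignment bugs before thousands of visitors have been exposed.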

Results Analysis & Implementation

Most teams stop at "Version B won." I dig deeper to understand why it won

Analysis Framework

Compound Learning Example

When a headline test increased trial sign-ups by 89% for a project management SaaS, I didn't just implement the winner.

"Advanced Project Management Platform"

"Ship Projects 40% Faster"

Applied pain-first positioning across entire funnel → 156% improvement in MQLs within 60 days

Advanced Testing Strategies That Multiply Results

Once you master the basics, advanced testing techniques can unlock exponential improvements that basic A/B tests miss.

Sequential Testing for Compound Growth

Instead of isolated tests, I run sequences where each test builds on previous winners. This creates compound improvements that far exceed individual test results.

Example Sequence for E-commerce Product Pages:

Total conversion rate improvement
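The arithmetic behind compounding is worth spelling out: when each test is measured against the previous winner, the lifts multiply rather than add. The sketch below uses hypothetical lifts (they are not the results of the sequence above), and assumes every lift holds up after rollout.

```python
from math import prod


def compound_lift(relative_lifts):
    """Total relative lift when each lift is measured against the previous winner."""
    return prod(1 + lift for lift in relative_lifts) - 1


# Hypothetical sequence of three winning tests: +20%, +15%, +10%.
sequence = [0.20, 0.15, 0.10]

total = compound_lift(sequence)
naive_sum = sum(sequence)

print(f"Compounded lift: {total:.1%}")
print(f"Simple sum:      {naive_sum:.1%}")
```

The compounded figure is always at least as large as the simple sum when every lift is positive, and the gap widens as the sequence gets longer.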

Multi-Page Experience Testing

Most teams test individual pages, but customers experience entire funnels. Testing page sequences often reveals bigger opportunities than page-level optimization.

DTC Brand Success Story

Tested the complete ad → landing page → checkout experience as integrated variations instead of separate elements. The holistic approach identified friction points that page-level testing missed.

improvement in ad-to-purchase conversion

Audience-Specific Optimization

Different customer segments respond to different messaging and experiences. Instead of one-size-fits-all pages, I test variations optimized for specific audience characteristics.

Segmentation Strategies:

A B2B SaaS client saw 67% better results by testing different homepage experiences for first-time visitors versus returning prospects.

Building a Testing Culture That Scales Growth

Individual tests create temporary lifts. Testing cultures create sustained competitive advantages. The brands I work with that achieve exceptional growth don't just run tests—they build experimentation into their DNA.

Elements of High-Performing Testing Cultures

Hypothesis Documentation

Every test starts with clear predictions and reasoning

Learning Libraries

Failed tests teach as much as winners when properly documented

Cross-Team Integration

Marketing, product, and engineering aligned on testing priorities

Long-Term Thinking

Tests designed to inform strategy, not just tactical optimization

Culture Success Story

I helped one client build a testing culture that generated 34 winning optimizations in their first year, creating a compounding growth effect that increased their customer acquisition efficiency by 189%.

Year 1 Results:

34 winning optimizations

189% CAC efficiency increase

Key Insight:

The compound learning effect meant their testing velocity and impact increased dramatically over time.

Why My Framework Delivers Breakthrough Results

Most growth consultants talk about testing. I've actually built the systems that drive measurable results at scale. My framework isn't theoretical—it's battle-tested across dozens of companies and millions in generated revenue.

What Makes My Approach Different

Technical Foundation

I implement proper tracking and statistical controls that ensure test reliability. Too many "winning" tests are based on flawed data collection.

Strategic Context

Every test connects to broader growth objectives. I optimize for customer lifetime value and sustainable unit economics, not just conversion rate vanity metrics.

Full-Funnel Integration

Tests don't happen in isolation. I consider how landing page changes affect email sequences, how pricing tests impact customer quality, and how product changes influence retention.

Proven Results Across Industries

AlphayMed

Testing-driven optimization contributed to $2.4M ARR growth

MiraPet

Strategic experimentation delivered 2x revenue increase in 90 days

Multiple SaaS Clients

Consistent 40-200% improvements in key conversion metrics

Compound Learning Advantage

Each test informs the next, creating a knowledge base that accelerates future optimization. Clients often see their testing velocity and impact increase dramatically over time.

Companies that master systematic A/B testing don't just optimize—they innovate faster than competitors. They turn market insights into measurable advantages while others guess their way through growth challenges.

Ready to Build Your Growth Testing Engine?

Random optimization creates random results. Breakthrough growth requires systematic experimentation that turns insights into scalable advantages.

I help growth-focused companies build A/B testing frameworks that deliver consistent, measurable improvements across their entire funnel. From hypothesis formation to statistical analysis to compound optimization strategies, I create the systematic foundation that transforms testing from a tactic into a competitive advantage.

Build Your Testing Framework

Filip Jankovic is a full-stack growth and product leader who has run over 100 successful A/B tests while driving $10M+ in measurable revenue growth. He specializes in systematic experimentation frameworks that help SaaS and e-commerce brands build sustainable competitive advantages through data-driven optimization.

Frequently Asked Questions About A/B Testing

How long should you run an A/B test?

A/B tests should run for at least two weeks, and often up to four, to cover weekly behavior patterns and collect enough data for statistical significance. Aim for at least 1,000 conversions per variation for reliable results.

What is statistical significance in A/B testing?

Statistical significance (typically at a 95% confidence level) indicates your test results are unlikely to be due to chance alone. More precisely, if the variations truly performed the same, a difference as large as the one observed would show up less than 5% of the time.
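To make that concrete, here is a sketch of a two-proportion z-test, one common way to check significance for conversion rates. The function name and the visitor and conversion counts are hypothetical, and most testing tools run an equivalent calculation for you.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate: the best estimate if both variations truly perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical test: control converts 300 of 3,000, variation converts 360 of 3,000.
z, p_value = two_proportion_z_test(conv_a=300, n_a=3000, conv_b=360, n_b=3000)

significant = p_value < 0.05  # 95% confidence level
print(f"z = {z:.2f}, p = {p_value:.4f}, significant at 95%: {significant}")
```

A p-value below 0.05 only means the result clears the 95% bar. It says nothing about whether the sample size and duration were fixed in advance, which is why stopping a test the moment it crosses the line is unreliable.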

What should you A/B test first on your website?

Start testing high-impact elements like headlines, call-to-action buttons, value propositions, and pricing displays. These typically yield the highest conversion improvements with minimal development effort.

How many variations can you test simultaneously?

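For reliable results, test 2-4 variations maximum. Each added variation splits your traffic further and adds another comparison that can produce a false positive, so the test needs substantially more traffic to reach statistical significance and the findings are easier to dilute.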

What traffic do you need for effective A/B testing?

You need at least 1,000 visitors per variation monthly for meaningful results. Low-traffic sites should focus on larger changes and longer test periods to achieve statistical significance.

What are the most common A/B testing mistakes?

Common mistakes include stopping tests too early, testing too many variables at once, not accounting for external factors, and making decisions based on insufficient data or statistical power.
